How important is economic growth in the long run?

In Stubborn Attachments, Tyler Cowen argues that ensuring high economic growth is perhaps the greatest moral good we can do. People living in the far future ought to be just as (or very nearly as) valuable to us as lives that exist today; humans don't pick when they are born. Thus, we ought to assign a discount rate of zero or very nearly zero to human lives in the far future. This is a point shared by the effective altruist movement and recently articulated by Toby Ord in his book, The Precipice. Cowen also argues that wealth accumulation through economic growth is our most powerful tool for improving human lives. Compounding growth overcomes all sorts of disagreements; if you're wealthier, you're much more able to pursue whatever other values you have.

Moreover, the direct effects of compounded wealth are remarkable, with even the relatively poor citizens of rich countries being fabulously better off than the richest of a century past. Queen Victoria didn't have antibiotics, air travel, or streaming music. As Cowen says, a drop of a single percentage point in annual growth from 1870 to 1990 would have resulted in the 1990 United States having the same standard of living as Mexico. The better we can ensure sustainable growth today, the better off humanity will be in the future, and given a zero discount rate, we have a moral obligation to make sustainable economic growth as high as possible.
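To get a feel for how much a single percentage point matters over 120 years, here is a minimal Python sketch. The 2% and 1% annual rates are illustrative assumptions, not Cowen's figures; only the 1870 to 1990 window comes from the text.

```python
def compound(initial, rate, years):
    """Level reached after compounding `initial` at `rate` per year."""
    return initial * (1.0 + rate) ** years

YEARS = 1990 - 1870        # window taken from the text: 120 years
BASELINE_RATE = 0.02       # assumed for illustration only
SLOWER_RATE = 0.01         # one percentage point lower, also assumed

baseline = compound(1.0, BASELINE_RATE, YEARS)
slower = compound(1.0, SLOWER_RATE, YEARS)

# How many times richer the faster-growing economy ends up.
ratio = baseline / slower
print(f"After {YEARS} years the faster economy is {ratio:.2f}x richer")
```

Under these assumed rates, the gap comes out at roughly a factor of three, which is the kind of difference that separates rich countries from middle-income ones.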

However, an EA Forum post (by excellent Twitter follow @kylebogosian) makes the important counterpoint that if past trends in long term economic growth continue, the next century could see a radical increase in the growth rate, in which case our "short term" meddling with getting economic growth from 2% to 4% may have little long run effect. For example, a rapid improvement in artificial intelligence could drive a revolution in growth broader than the industrial revolution. It might make sense for EA work to focus on ensuring an AI revolution goes smoothly (e.g. making sure a cold war in AI military weapons doesn't result in a catastrophic war) rather than focus on economic growth broadly. This is an important crux we must resolve if we want to know where to best focus our efforts. Will economic growth ramp up out of control due to technological advances without our help? Is restraint of technological progress perhaps warranted to avoid a catastrophic event? Or is economic growth instead fragile and endangered, needing strong investment and protection to ensure our long run descendants are well off?

I'm going to first touch briefly on the research that the EA Forum post relies on and why I don't think it means we ought to update our priors much about the importance of economic growth. I'll then discuss some approaches that would answer our question better.

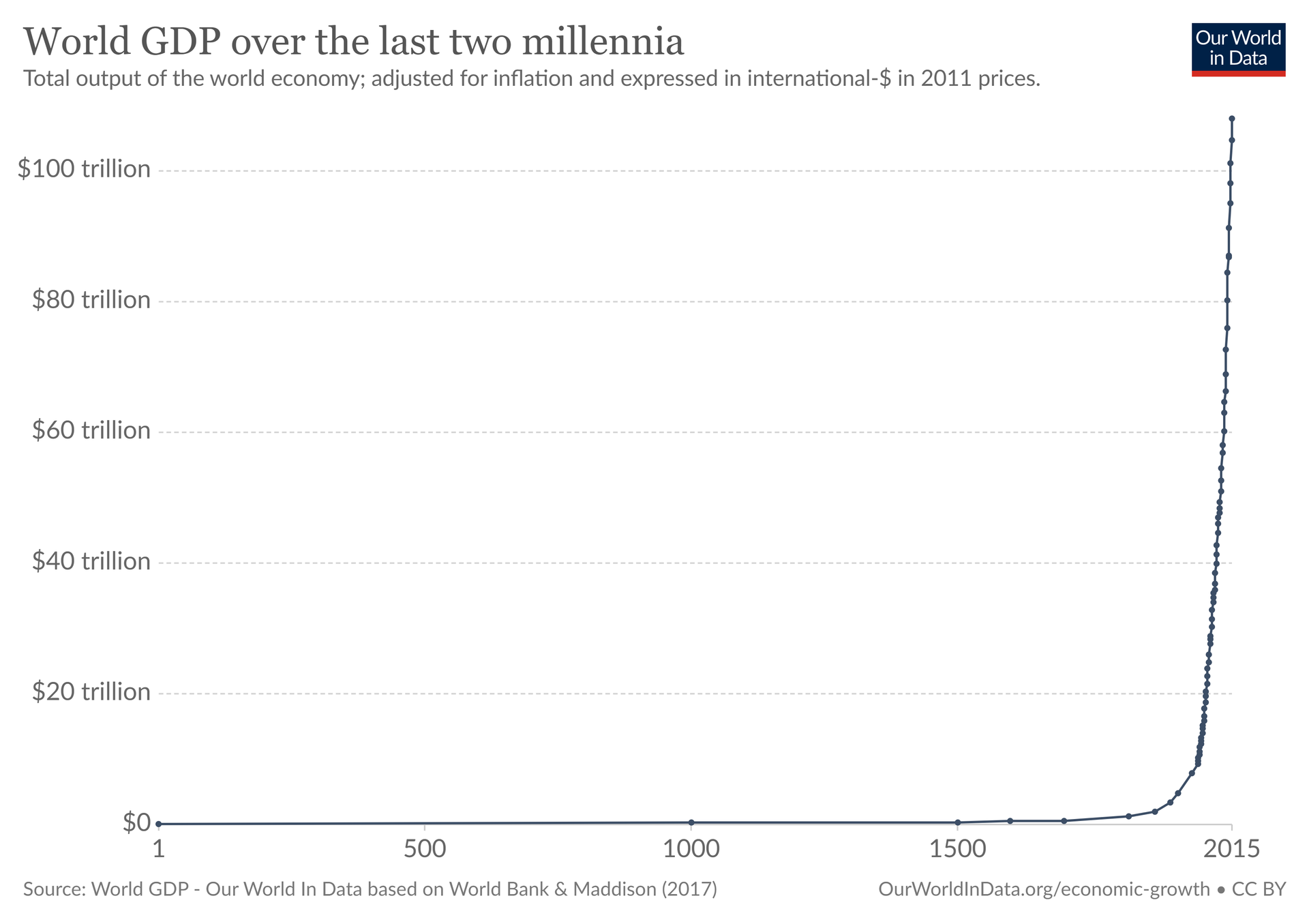

Historical Superexponential Growth

David Roodman has an article at the Open Philanthropy Project (cited in the forum post), also starting from a view valuing the long term future of humanity. He argues from an "outside view" of economics which would challenge the more typical exponential growth models used by most economists. Roodman creates a trajectory based on historical economic productivity data which posits that humanity will see increasing rates of productivity growth, resulting in a spike in economic output (technically the spike would go to infinity, but everyone agrees that's not the correct interpretation of the data). Roodman also creates an economic growth model which endogenizes technology to demonstrate why we might be seeing this increasing superexponential historical growth rate.
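The difference between ordinary exponential growth and a superexponential spike can be made concrete with a toy comparison. The sketch below assumes a stylized deterministic law dy/dt = s * y^(1+B), which reaches infinity in finite time when B > 0 and reduces to exponential growth when B = 0. The parameter values are invented for illustration, and this is not Roodman's fitted stochastic model.

```python
import math

def exponential_path(y0, s, t):
    """Solution of dy/dt = s * y (the B = 0 case)."""
    return y0 * math.exp(s * t)

def hyperbolic_path(y0, s, b, t):
    """Closed-form solution of dy/dt = s * y**(1 + b) for b > 0."""
    base = y0 ** (-b) - b * s * t
    if base <= 0:
        raise ValueError("t is at or past the finite blow-up time")
    return base ** (-1.0 / b)

def blow_up_time(y0, s, b):
    """Time at which the hyperbolic path diverges."""
    return y0 ** (-b) / (b * s)

# Hypothetical parameters, chosen only to keep the numbers readable.
Y0, S, B = 1.0, 0.02, 0.5

t_star = blow_up_time(Y0, S, B)
for t in (0, 25, 50, 75, 95):
    print(t, round(exponential_path(Y0, S, t), 2), round(hyperbolic_path(Y0, S, B, t), 2))
print("blow-up time:", t_star)
```

Both paths start at the same level with the same initial growth rate, but the hyperbolic one pulls away and diverges at a finite date. That divergence is the "spike" that nobody reads literally.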

Roodman spends a lot of time discussing and building stochastic growth mechanisms into his model. That's super cool, but ultimately it's not relevant to our question of whether economic growth will go superexponential, as that is already baked into the model. I'm going to focus on whether the data are actually high enough quality for us to start making decisions on where to focus our efforts on improving the long run future of humanity as well as whether the model he creates is one we should use.

GDP Problems

First, GDP estimates are quite tenuous in general. Roodman says "the estimates contain information" in his paper draft, and I don't think any further praise is warranted. For purposes of taking actions on priorities about the long term future, data used to extrapolate productivity back in time is shaky.

In modern economies with entire government agencies dedicated to recording economic data, we can only measure nominal GDP directly and our calculation of real GDP growth is entirely determined by the GDP deflator. GDP deflators are statistical methods where economists attempt to separate the respective contributions of monetary and productivity growth to increased measured activity. This is related to the Consumer Price Index which also tries to solve this problem by looking at baskets of goods. As noted by many economists (Gabriel Zucman, Russ Roberts, Susan Houseman, Diane Coyle, Michael Boskin), GDP deflators are limited in their usefulness. They underestimate economic growth, since new products come along which can't be compared with older products. For example, smartphones which allow you to browse the internet on the go and stream millions of songs to your device instantaneously didn't exist in 1990, regardless of ability to pay.
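As a rough illustration of why the deflator matters so much, the sketch below backs out real growth from nominal growth and a price index. All three numbers are hypothetical, and the "true" price growth is simply an assumed figure standing in for what a deflator might show if new goods could be credited properly.

```python
def real_growth(nominal_growth, price_growth):
    """Real growth implied by nominal growth and a price-index growth rate."""
    return (1.0 + nominal_growth) / (1.0 + price_growth) - 1.0

NOMINAL = 0.05          # hypothetical nominal GDP growth
MEASURED_PRICES = 0.03  # hypothetical published deflator growth
TRUE_PRICES = 0.02      # hypothetical price growth after crediting new goods

measured_real = real_growth(NOMINAL, MEASURED_PRICES)
adjusted_real = real_growth(NOMINAL, TRUE_PRICES)

# The gap is real growth the official figure would miss under these assumptions.
understatement = adjusted_real - measured_real
print(f"measured real growth: {measured_real:.4f}")
print(f"adjusted real growth: {adjusted_real:.4f}")
print(f"understatement:       {understatement:.4f}")
```

A one-point error in the deflator moves measured real growth by about one point, so the real growth series is only as good as the price adjustment behind it.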

The question inflation adjusters seek to answer is "how expensive was this good in the past compared to now", but huge swaths of products didn't exist in the past. Moreover, the further we are from the present, the harder it is to compare productivity, since more and more goods simply hadn't been invented yet. This is even mentioned in Brad De Long's 1998 paper (a source of one of Roodman's data sets). Because GDP is bad at measuring goods that weren't invented yet, De Long writes: "So I—somewhat arbitrarily—use this to assign an additional fourfold multiplication to output per capita since 1800 in addition to the increases in output per capita calculated by Maddison." Thus, the challenge of building long term historical GDP data means we should be quite skeptical about turning around and using that data to predict future growth trends. All we're really doing is extrapolating the backwards estimates of some economists forwards. The error bars will be very large.

Growth Model Problems

Roodman's post also explores economic growth models. I think the explanation and application of making technology endogenous to the growth model is really good. Of course, the question is whether technological growth contributions will scale with the level of production. And there is not much written to demonstrate why technology scales with economic productivity level. Relatedly, Daniel Kokotajlo on LessWrong makes a more in-depth case for AI-productivity decoupling.

Moreover, the productivity-technology feedback loop is quite suspect given that the best data we have (from the last 70 years) show absolutely no superexponential economic growth. This is a problem for endogenous technology models. As we get closer to the asymptote, we should be seeing obviously increasing technological returns. Instead, the conventional wisdom has been that we are slowing down recently. Cost disease, the great stagnation, 140 characters instead of flying cars.

Why are there all these problems? Well, for one thing, treating the world economy as a continuous system from 10000 BCE to now could be inaccurate. I think there are serious questions about whether or not entire aspects of growth models existed at various times of human history. How did capital accumulation happen, and how was it destroyed? What about human capital and labor? How do we think about labor as a factor if large parts of the population aren't being paid?

Some important changes in economic growth came from the establishment of specific ideas, like banking sectors and bank notes, liquidity and credit markets, and joint stock corporations. If these count as "technology" (and I think they do), is their invention a result of productivity? Such conclusions are not self-evident. Think about Dungeons and Dragons. It's a tabletop role-playing game that takes place largely in the minds of the players. The only "technology" it requires is paper for recording information, and dice. Why wasn't DnD popular in ancient Rome? If the limit was literacy, why wasn't it popular in the early 1900s developed world? Of course, the mainstream economic model developed by Robert Solow in the 50s took technology as exogenous, and that might not be exactly right either, but it certainly feels that some technology is exogenous and random.

But if it's possible, or even intuitive, that specific institutions fundamentally changed how economic growth occurred in the past, then it may be a mistake to model global productivity as a continuous system dating back thousands of years. In fact, if you took a look at population growth, a data set that is also long-lived and grows at a high rate, the growth rate fundamentally changed over time. Given the magnitude of systemic economic changes of the past few centuries, modeling the global economy as continuous from 10,000 BCE to now may not give us good predictions. The outside view becomes less useful at this distance.

Technological Impacts

So if this outside view approach isn't the right tool, how should we investigate the importance of economic growth versus technological revolutions in our future?

One important question is how likely it is that artificial intelligence, rather than some other technology, will be the source of any technological feedback mechanism. I agree with most of the LessWrong-adjacent community that AI seems to be the most likely source of a revolutionary change, economic growth or otherwise, but it's not the only one. Robin Hanson posits in The Age of Em (for more on it, here's an old discussion I wrote) that the most important revolution of the future will be the uploading and emulation of human minds into software. Minds that can run faster given better hardware could certainly lead to a feedback mechanism of high intelligence or productivity. But so could genetic engineering, which seems quite plausible in a brand new age of mRNA vaccines. There are also the promises of quantum computing breakthroughs, nanotechnology, or nuclear fusion. I'm doubtful any particular technology here is more likely than AI breakthroughs, but it's quite plausible that AI progress is much slower than expected, or highly constrained by hardware in unforeseen ways. In such cases, perhaps another one of these technologies causes its own revolution.

Given that, it may be useful to investigate human intelligence genetic enhancement timelines just as we've started to look into AI timelines.

Artificial Intelligence

Additionally, while I do think AI will be a major factor in future economic growth, there's a major difference between a revolution in economic output and "previous economic growth is no longer a factor". If the AI revolution is something like a "hard takeoff" scenario as posited by Bostrom, then perhaps trying to worry about any economic growth today is silly. That could happen, but there's also AI Impacts' recent (2018) work which argued against a hard takeoff. There's also healthy skepticism that AI takeoffs should function this way, articulated here by Ben Garfinkel. Perhaps most important is the question of the underlying growth model. Tyler Cowen points out that some economists model growth more like a classical Solow model with "catch-up growth" seen in less developed countries which can borrow capital they don't have. On the other hand is the idea of "increasing returns", where ideas (or perhaps technology) are more dominant, leading to increasing returns for richer countries.

Mapping this onto AI, one possibility is that the discovery of powerful AI will create a massive positive feedback loop and we quickly jump to the highest productivity level we can reach within our physical limits, perhaps running up against the amount of energy hitting the Earth. Another possibility is that growth accelerates but doesn't hit any physical limitations, somewhat like technology has done so far in humanity's history, or how economic growth is often modeled as exponential growth. Here, physical limitations aren't met, just like we haven't "run out of oil". Markets adjust to scarcity and investment opportunity. Of course, this would require an AI revolution that is much less explosive than, for example, Bostrom's Superintelligence suggests is likely. But the bottom line is that we don't have much certainty about what the economics of an AI revolution will look like. So how might we remedy that?

Ultimately, we want to know how to prioritize economic growth as an EA (or more generally a societal) goal. In order to figure that out, we want to try to answer these questions:

- What are the most likely AI timelines/when will certain AI capabilities exist?

- How fast are those timelines once AI exists/will there be explosive growth or more gradual growth as AI takes off?

- How will economic growth up to AI "take off" affect the capabilities of advanced AI?

- Are we measuring growth accurately?

- How will economic growth affect existential risk?

- What can actually be done to promote growth?

Thinking about that first question, ideas that come to mind are tracking how fast AI capabilities have arrived in the past 20 years, tracking hardware improvements over time, tracking what AI researchers suggest is possible, and tracking what AI research groups (like DeepMind) are working on and how long it has taken them to reach those goals in the past. AI timelines are a known question that EAs are already interested in.

The second question is somewhat mysterious to me. Most people in the EA space seem to lean towards a pretty fast takeoff regardless of how we get there. The only good example I've really found of how a takeoff might be slower is related to software vs hardware iteration and "hardware overhangs". If software improvements are few and far between, the cycle of improvement for AIs is about hardware design and production. Computer hardware has gotten incredibly powerful over time, but nonetheless, it takes time to produce new chips even if you have superhuman chip design ability. Updating software is a much tighter loop.

The third question returns to the idea of productivity impacts on technological progress. Earlier I pointed out that economic productivity doesn't guarantee technological progress, but it's certainly possible that economic productivity growth is necessary for technological progress. What if AI needs a lot more economic growth before it becomes powerful? Cloud computing, machine learning research, specialized chip design, all this might not be possible in a world where economic growth is lower than it is today. And if AI is further away than we initially thought, perhaps we need sustained economic growth to reach that level of productivity. This gets more important if AI is the key to unlocking things like life extension or complicated health improvements. The longer we delay economic growth, the more contemporary humans we condemn to die younger. A big loss of productivity could push AI much further out of reach, which could be quite damaging, even if eventual general artificial intelligence is created a hundred years later.

The fourth question harkens back to points I've made about GDP measurement. If new technology is invented with no current substitute, it is almost impossible to measure a great deal of the value it provides to consumers for free. It's thus plausible that technological acceleration could be happening, but official GDP numbers don't reflect that reality. The goal of tracking technological progress may require separate efforts from tracking general GDP. Or perhaps if technological progress is accelerating, we need to adapt our productivity measures to better reflect those changes, or indeed change what we measure from GDP to something like Tyler Cowen's "Wealth Plus". He defines it as not just GDP but also "leisure time, household production, and environmental amenities", and also ties it in with sustainability. How we could actually measure this remains understudied.

Finally, before we get to risk, there's the question of tractability: what can we actually do to promote growth? Economic growth is a heavily studied topic. We don't have a slam dunk answer here, which means we should assign a significant probability to the possibility that boosting economic growth to 6% or even 4% consistently is not achievable even with correct policy. Existential risk of course has its own tractability problems, but it's also significantly less studied. Nonetheless, it's also possible that economic growth is limited not by lack of policy knowledge, but by incentives inherent in our political structures. For example, American cities have difficulty creating enough housing to meet demand due to the political power wielded by current property owners. If we can't reform that power structure, we may be unable to unlock growth from affordable housing regardless of economic policy knowledge. Thus, while I am skeptical there is low hanging fruit to improve economic growth, empirical evidence could change that, which would change the priorities I lay out here.

Existential Risk

X-risk is underfunded enough that simply working on it could have spillover effects that promote both long term growth and AI Alignment.

Tyler Cowen is concerned about the fragility of growth and argues we shouldn't take it for granted. In that way, existential risk is a major problem for sustaining economic growth. Of course, economic growth itself has some relationship to new technologies and, as we are keenly aware, new technologies are a major source of existential risk. This makes our overall view of growth quite complicated, although it points towards a much more unified overall message: perhaps working to minimize existential risk is both plausibly pro-growth and pro-AI alignment. Certainly one can imagine that better governance and more expertise available to policy makers could improve the prospects of both goals.

Moreover, economic growth and AI alignment research differ vastly in neglectedness. Indeed, almost any person involved in the economy could be said to be part of a move towards continuing economic growth as long as they are willing to buy better products for cheaper (and who isn't). Individual economic actors are thus already following their own incentives, which will continue to drive growth. AI alignment needs a much more direct approach, as the risks are imposed on society while the costs are borne by the groups that work on the problem. This also points to existential risk considerations in economic growth: while there are plenty of incentives to grow the economy, a long term view that takes into account far future generations emphasizes the importance of sustainability and Cowen's previously mentioned "Wealth Plus". So again, focusing on neglected aspects of economic growth might end up overlapping heavily with the neglected work of AI alignment.

Conclusions

The most definitive point is that existential risk is a major neglected issue, as Effective Altruism circles already largely agree. The methods and sources of economic growth are not as well understood as we might want, and the interaction of higher growth levels with the progress of technology is important, but also unclear. It also seems unlikely that there is obvious low-hanging fruit where economic growth could suddenly be boosted by several percentage points via some as-yet-unknown policy. If there were, I think this calculation would be more complicated. For this reason, I think we can safely say that existential risk prevention is a better use of current EA resources than pure productivity growth. There is also some overlap where technological existential risk and economic growth would both benefit from better governance institutions, which is both important and neglected, although not particularly tractable.

Another way to state this is that work on preventing economic collapses seems like a clear positive, as any collapse could be a risk factor in other existential risks, whereas significant work on pushing economic growth to be substantially higher than it is currently seems less promising. While neither is very tractable (how do we just make "good governance institutions" anyway?), existential risk work should likely remain a higher EA priority than economic growth as it is both more neglected and less likely to add existential risk.

Picture Credit: Max Roser (2013) - "Economic Growth". Published online at OurWorldInData.org. Retrieved from: 'https://ourworldindata.org/economic-growth' [Online Resource], Licensed under CC BY 4.0, original data from Maddison Project Database